PupilCalib

Turning Eye Tracking into 3D!

🔍 Overview: Ever wondered exactly where someone is looking? PupilCalib takes gaze data from an eye tracker and projects it into 3D! It is a modular API built to transfer gaze data from a typical eye-tracking device into new frames of reference, such as the view of a separate camera. You could apply this in augmented reality, or let a car “see” where the driver is looking through its own camera. Talk about futuristic! 🚗👀

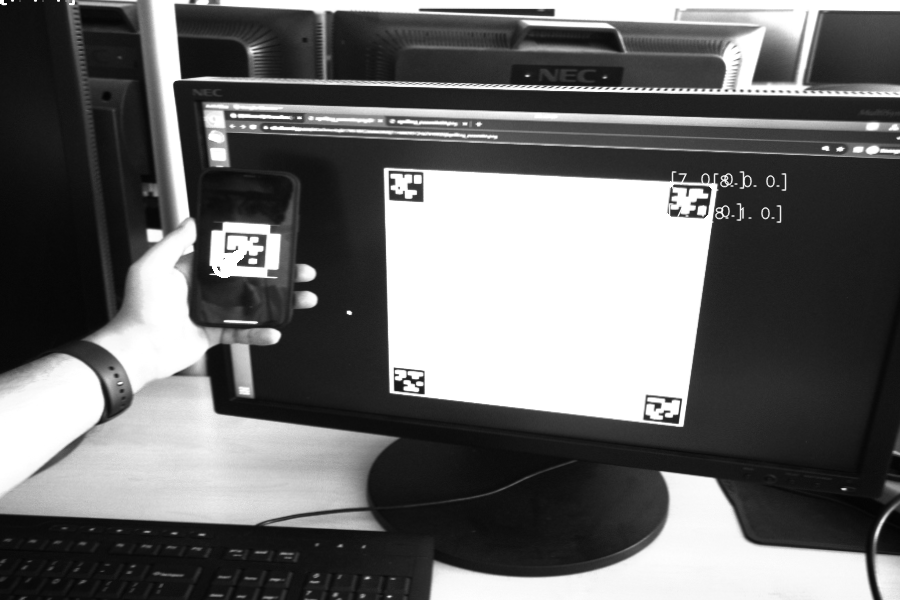

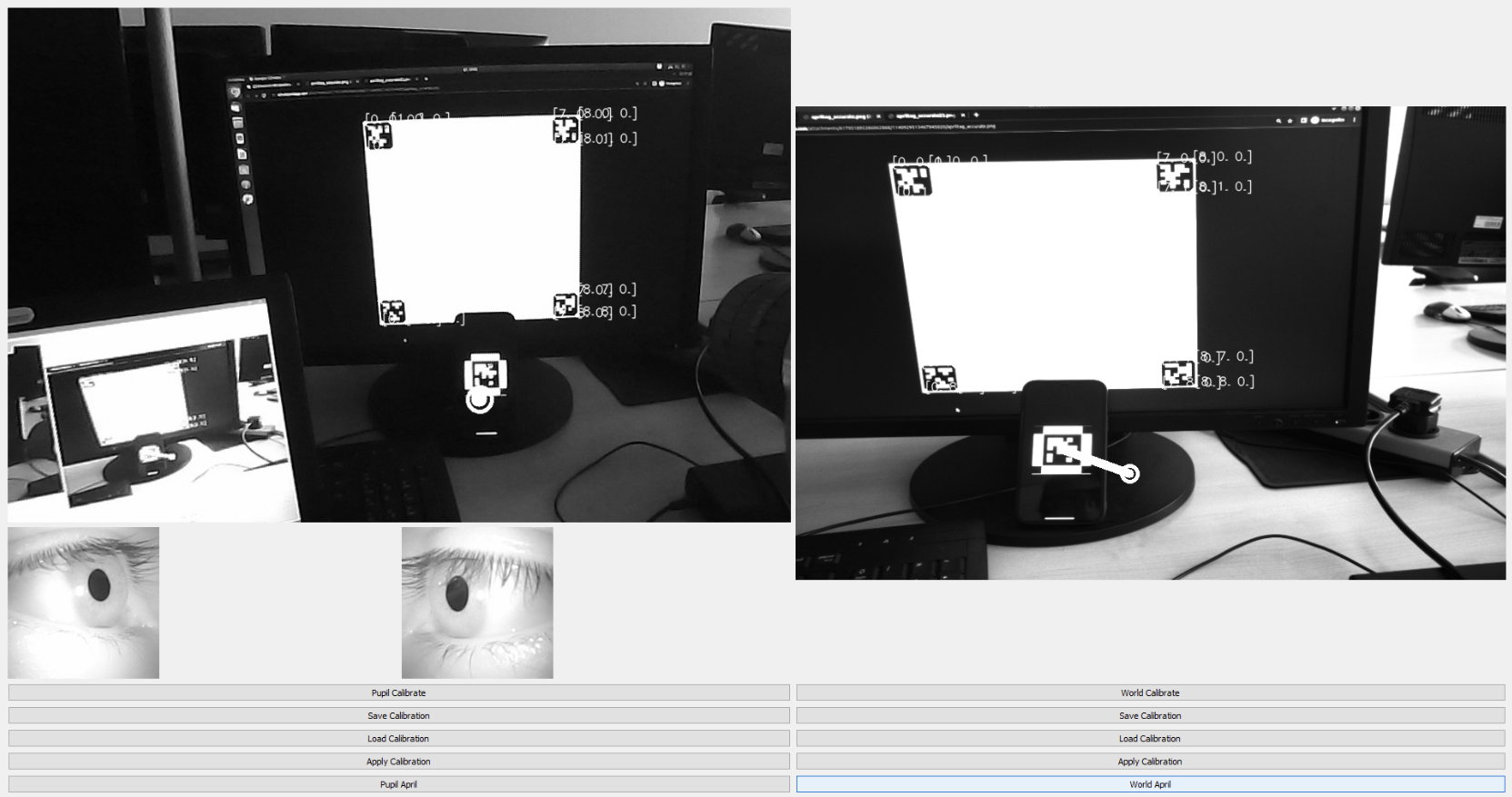

📸 How It Works: A Pupil Core headset captures eye movements while an external camera (like an IDS camera) provides a second view. PupilCalib maps where the user is looking, in real time, from one view to the other. Think of it as a “translator” for eye gaze: it takes gaze data expressed in one frame of reference (the headset) and projects it onto another (such as the external camera’s wider view). 🔄

- Camera Calibration 🧩: Both cameras are calibrated with chessboard patterns, which helps gaze data transfer accurately between them, even under difficult lighting or at longer distances.

- AprilTags 📐: Similar to QR codes, these visual markers give both cameras a shared reference, helping PupilCalib estimate depth and position so it can track the user’s gaze more precisely.
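
The “translator” idea can be sketched in a few lines of plain Python. This is only an illustrative sketch, not PupilCalib’s actual code. It assumes each camera has already estimated the pose of the same AprilTag as a 4x4 rigid transform, that the gaze target is available as a 3D point in the headset’s scene-camera frame, and that the external camera follows a simple pinhole model without lens distortion. All function names, poses, and intrinsics below are made up for the example.

```python
import math


def make_pose(yaw_deg, tx, ty, tz):
    """Hypothetical helper: 4x4 rigid transform (rotation about Y, then translation)."""
    c, s = math.cos(math.radians(yaw_deg)), math.sin(math.radians(yaw_deg))
    return [
        [c, 0.0, s, tx],
        [0.0, 1.0, 0.0, ty],
        [-s, 0.0, c, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def matmul(a, b):
    """Multiply two 4x4 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def invert_rigid(t):
    """Invert a rigid transform: the inverse of [R | t] is [R^T | -R^T t]."""
    r_t = [[t[j][i] for j in range(3)] for i in range(3)]
    trans = [-sum(r_t[i][k] * t[k][3] for k in range(3)) for i in range(3)]
    return [r_t[i] + [trans[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def transform_point(t, p):
    """Apply a 4x4 rigid transform to a 3D point."""
    x, y, z = p
    return tuple(t[i][0] * x + t[i][1] * y + t[i][2] * z + t[i][3]
                 for i in range(3))


def project(p, fx, fy, cx, cy):
    """Pinhole projection of a 3D point (camera frame) to pixel coordinates."""
    x, y, z = p
    if z <= 0:
        raise ValueError("point is behind the camera")
    return (fx * x / z + cx, fy * y / z + cy)


# Assumed inputs: pose of the SAME tag as seen by each camera (metres).
tag_in_scene = make_pose(10.0, 0.1, 0.0, 0.8)    # headset scene camera
tag_in_ext = make_pose(-20.0, -0.2, 0.05, 1.5)   # external camera

# The shared tag links the two frames: scene -> tag -> external.
scene_to_ext = matmul(tag_in_ext, invert_rigid(tag_in_scene))

# Assumed 3D gaze point in the scene-camera frame (here: the tag's center).
gaze_scene = (0.1, 0.0, 0.8)
gaze_ext = transform_point(scene_to_ext, gaze_scene)

# Assumed external-camera intrinsics (these would come from calibration).
pixel = project(gaze_ext, fx=1000.0, fy=1000.0, cx=640.0, cy=360.0)
print("gaze in external frame:", gaze_ext)
print("gaze pixel in external image:", pixel)
```

The gaze point here is placed at the tag’s center on purpose, so the transformed point should land on the tag’s position as seen by the external camera. That makes for a handy sanity check before trying real gaze data.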

🧪 Tested and True: In trials across various lighting conditions and distances, PupilCalib performed best in well-lit, close-up setups, and still held its own in more demanding ones. ⚙️ It’s modular, so with a bit of customization you could adapt it to other eye trackers or cameras. Pretty cool, right?

✨ Why It’s Exciting: From helping machines understand human attention to enabling new virtual experiences, PupilCalib opens up possibilities for research and real-world applications. Just plug in, calibrate, and bring eye tracking to life!

📄 Read my full report here: Report Link

🎥 View the presentation here: Presentation Link

💻 Check out the code here: GitHub Repository